Contrary to popular belief, technical SEO isn’t too challenging once you get the basics down; you may even be using a few of these tactics and not know it.

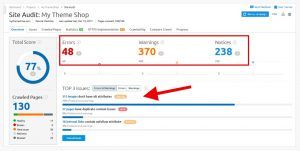

However, it is important to know that your site probably has some type of technical issue. “There are no perfect websites without any room for improvement,” Elena Terenteva of SEMrush explained. “Hundreds and even thousands of issues might appear on your website.”

For example, over 80% of websites examined had 4xx broken link errors, according to a 2017 SEMrush study, and more than 65% of sites had duplicate content.

Ultimately, you want your website to rank better, get better traffic, and net more conversions. Technical SEO is all about fixing errors to make that happen. Here are 12 technical SEO elements to check for maximum site optimization.

1. Identify crawl errors with a crawl report

One of the first things to do is run a crawl report for your site. A crawl report, or site audit, will provide insight into some of your site’s errors.

You will see your most pressing technical SEO issues, such as duplicate content, low page speed, or missing H1/H2 tags.

You can automate site audits using a variety of tools and work through the list of errors or warnings created by the crawl. This is a task you should work through on a monthly basis to keep your site clean of errors and as optimized as possible.

2. Check HTTPS status codes

Switching to HTTPS is a must. If the migration is handled badly, for example if old HTTP URLs are not redirected to their HTTPS versions, search engines and users may get 4xx and 5xx HTTP status codes instead of your content.

A Ranking Factors Study conducted by SEMrush found that HTTPS is now a very strong ranking factor and can impact your site’s rankings.

Make sure you switch over, and when you do, use this checklist to ensure a seamless migration.

Next, you need to look for other status code errors. Your site crawl report gives you a list of URL errors, including 404 errors. You can also get a list from the Google Search Console, which includes a detailed breakdown of potential errors. Make sure your Google Search Console error list is always empty, and that you fix errors as soon as they arise.
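As a rough illustration of working through a crawl report, the sketch below groups URLs by the class of status code they returned, so the 4xx and 5xx errors can be fixed first. The `crawl` dictionary and its URLs are made-up sample data, and the function names are hypothetical; a real audit tool would export something similar.

```python
def classify_status(code: int) -> str:
    """Map an HTTP status code to a coarse audit bucket."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"
    if 500 <= code < 600:
        return "server_error"
    return "other"


def summarize_crawl(results: dict[str, int]) -> dict[str, list[str]]:
    """Group crawled URLs by bucket, keeping the original crawl order."""
    buckets: dict[str, list[str]] = {}
    for url, code in results.items():
        buckets.setdefault(classify_status(code), []).append(url)
    return buckets


# Hypothetical crawl export: URL -> status code returned.
crawl = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 404,
    "https://www.example.com/moved": 301,
    "https://www.example.com/api/report": 500,
}

report = summarize_crawl(crawl)
for bucket in ("client_error", "server_error"):
    for url in report.get(bucket, []):
        print(bucket, crawl[url], url)
```

Redirects are kept in their own bucket because they are not errors, but long redirect chains are still worth reviewing.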

Finally, make sure the SSL certificate is correct. You can use SEMrush’s site audit tool to get a report.

3. Check XML sitemap status

The XML sitemap serves as a map for Google and other search engine crawlers. It essentially helps the crawlers find your website pages so they can index and rank them accordingly.

You should ensure your site’s XML sitemap meets a few key guidelines:

- Make sure your sitemap is formatted properly in an XML document

- Ensure it follows XML sitemap protocol

- Have all updated pages of your site in the sitemap

- Submit the Sitemap to your Google Search Console.

How do you submit your XML Sitemap to Google?

You can submit your XML sitemap to Google via the Google Search Console Sitemaps tool. You can also reference the sitemap URL (e.g. https://ift.tt/1yk2Rmx) anywhere in your robots.txt file.

Make sure your XML Sitemap is pristine, with all the URLs returning 200 status codes and proper canonicals. You do not want to waste valuable crawl budget on duplicate or broken pages.
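To make the sitemap checks more concrete, here is a minimal sketch that parses a sitemap and lists the URLs it declares, which you could then feed into a status code check. The sample sitemap and the `sitemap_urls` helper are assumptions for illustration; the namespace is the one defined by the sitemap protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value found under <url> entries in a sitemap."""
    root = ET.fromstring(xml_text)  # raises ParseError if the XML is malformed
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Made-up sample sitemap (XML declaration omitted for brevity).
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/about</loc></url>
</urlset>"""

for url in sitemap_urls(SAMPLE):
    print(url)
```

In robots.txt, the reference is a single line of the form `Sitemap: https://www.example.com/sitemap.xml`, where the URL shown here is a placeholder.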

4. Check site load time

Your site’s load time is another important technical SEO metric to check. According to the technical SEO error report via SEMrush, over 23% of sites have slow page load times.

Site speed is all about user experience and can affect other key metrics that search engines use for ranking, such as bounce rate and time on page.

To find your site’s load time you can use Google’s PageSpeed Insights tool. Simply enter your site URL and let Google do the rest.

You’ll even get site load time metrics for mobile.

This has become increasingly important after Google’s rollout of mobile-first indexing. Ideally, your page load time should be less than 3 seconds. If it is more for either mobile or desktop, it is time to start tweaking elements of your site to decrease site load time for better rankings.

5. Ensure your site is mobile-friendly

Your site must be mobile-friendly to improve technical SEO and search engine rankings. This is a pretty easy SEO element to check using Google’s Mobile-Friendly Test: just enter your site and get valuable insights on the mobile state of your website.

You can even submit your results to Google to let them know how your site performs.

A few mobile-friendly solutions include:

- Increase font size

- Embed YouTube videos

- Compress images

- Use Accelerated Mobile Pages (AMP).

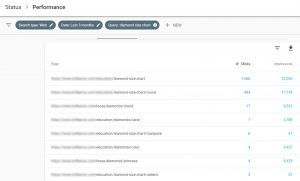

6. Audit for keyword cannibalization

Keyword cannibalization can cause confusion among search engines. For example, if you have two pages in keyword competition, Google will need to decide which page is best.

“Consequently, each page has a lower CTR, diminished authority, and lower conversion rates than one consolidated page will have,” Aleh Barysevich of Search Engine Journal explained.

One of the most common keyword cannibalization pitfalls is optimizing the home page and a subpage for the same keywords, which often happens in local SEO. Use Google Search Console’s Performance report to look for pages that are competing for the same keywords. Use the filter to see which pages have the same keywords in the URL, or search by keyword to see how many pages are ranking for those same keywords.

In this example, notice that there are many pages on the same site with the same exact keyword. It might be ideal to consolidate a few of these pages, where possible, to avoid keyword cannibalization.
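If you export query and page data from the Performance report, a short script can surface the competing pages for you. The sketch below assumes the export has been reduced to `(query, page)` pairs; the sample rows and the `find_cannibalized` name are hypothetical.

```python
from collections import defaultdict


def find_cannibalized(rows):
    """Return {query: sorted pages} for queries that more than one page ranks for.

    rows is an iterable of (query, page) pairs.
    """
    pages_by_query = defaultdict(set)
    for query, page in rows:
        # Normalize case and whitespace so "Plumber Austin" matches "plumber austin".
        pages_by_query[" ".join(query.lower().split())].add(page)
    return {
        query: sorted(pages)
        for query, pages in pages_by_query.items()
        if len(pages) > 1
    }


# Made-up sample rows, as if exported from a performance report.
rows = [
    ("plumber austin", "/"),
    ("Plumber Austin", "/services/plumbing"),
    ("emergency plumber", "/services/emergency"),
]

for query, pages in find_cannibalized(rows).items():
    print(query, "->", ", ".join(pages))
```

Queries that come back with several pages are candidates for consolidation, not automatic proof of a problem.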

7. Check your site’s robots.txt file

If you notice that not all of your pages are indexed, the first place to look is your robots.txt file.

There are sometimes occasions when site owners will accidentally block pages from search engine crawling. This makes auditing your robots.txt file a must.

When examining your robots.txt file, look for “Disallow” rules.

A “Disallow” rule tells search engines not to crawl the matching path on your site, and a bare “Disallow: /” blocks your entire website. Make sure none of your relevant pages are being accidentally disallowed in your robots.txt file.
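One way to audit this without reading the file line by line is Python’s standard `urllib.robotparser` module. The sketch below is a minimal example under assumed inputs: the robots.txt content and the URLs are invented, and `blocked_urls` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "*") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Made-up robots.txt content and the pages we expect to be crawlable.
ROBOTS_TXT = """User-agent: *
Disallow: /private/
"""

important_pages = [
    "https://www.example.com/blog/",
    "https://www.example.com/private/report.html",
]

for url in blocked_urls(ROBOTS_TXT, important_pages):
    print("Blocked by robots.txt:", url)
```

Note that the standard library parser uses simple prefix matching, so results for wildcard rules may differ from how a particular search engine interprets them.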

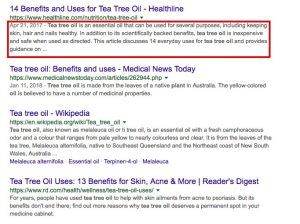

8. Perform a Google site search

On the topic of search engine indexing, there is an easy way to check how well Google is indexing your website. In Google search, type “site:yourwebsite.com”.

It will show you all pages indexed by Google, which you can use as a reference. A word of caution, however: if your site is not on the top of the list, you may have a Google penalty on your hands, or you’re blocking your site from being indexed.

9. Check for duplicate metadata

This technical SEO faux pas is very common for ecommerce sites and large sites with hundreds to thousands of pages. In fact, nearly 54% of websites have duplicate meta descriptions, and approximately 63% are missing meta descriptions altogether.

Duplicate meta descriptions occur when similar products or pages simply have content copied and pasted into the meta descriptions field.

A detailed SEO audit or a crawl report will alert you to meta description issues. It may take some time to get unique descriptions in place, but it is worth it.
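If your crawl tool exports a list of URLs with their meta descriptions, the duplicates and gaps are easy to find with a few lines of code. This sketch assumes that export has been loaded into a dictionary of URL to description; the sample data and function names are hypothetical.

```python
from collections import defaultdict


def duplicate_descriptions(pages: dict[str, str]) -> dict[str, list[str]]:
    """Return {normalized description: sorted URLs} for descriptions used more than once."""
    urls_by_text = defaultdict(list)
    for url, description in pages.items():
        # Ignore case and extra whitespace when comparing descriptions.
        key = " ".join(description.lower().split())
        if key:
            urls_by_text[key].append(url)
    return {text: sorted(urls) for text, urls in urls_by_text.items() if len(urls) > 1}


def missing_descriptions(pages: dict[str, str]) -> list[str]:
    """Return the URLs whose description is empty or whitespace only."""
    return sorted(url for url, description in pages.items() if not description.strip())


# Made-up crawl export: URL -> meta description.
pages = {
    "/shoes/red": "Buy running shoes online.",
    "/shoes/blue": "Buy running shoes  online.",
    "/about": "",
}

print(duplicate_descriptions(pages))
print(missing_descriptions(pages))
```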

10. Meta description length

While you are checking all your meta descriptions for duplicate content errors, you can also optimize them by ensuring they are the correct length. This is not a major ranking factor, but it is a technical SEO tactic that can improve your CTR in SERPs.

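In December 2017, Google increased the typical length of displayed snippets from roughly 160 characters to roughly 320, but it shortened them again in May 2018. A longer description gives you space to add keywords, product specs, location (for local SEO), and other key elements, so put the most important details in the first 160 characters in case the rest is truncated.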

11. Check for site-wide duplicate content

Duplicate content in meta-descriptions is not the only duplicate content you need to be on the lookout for when it comes to technical SEO. Almost 66% of websites have duplicate content issues.

Copyscape is a great tool to find duplicate content on the internet. You can also use Screaming Frog, Sitebulb or SEMrush to identify duplication.

Once you have your list, it is simply a matter of running through the pages and changing the content to avoid duplication.
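For a sense of how duplicate detection can work, the sketch below compares pages using word shingles and Jaccard similarity. This is a common textbook approach chosen for illustration, not the method any of the tools above are confirmed to use; the page texts and the 0.8 threshold are assumptions.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set:
    """Split text into overlapping runs of `size` lowercase words."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_pages(pages: dict[str, str], threshold: float = 0.8) -> list:
    """Return (url_1, url_2, similarity) for page pairs at or above the threshold."""
    sets = {url: shingles(body) for url, body in pages.items()}
    found = []
    for first, second in combinations(sorted(sets), 2):
        score = jaccard(sets[first], sets[second])
        if score >= threshold:
            found.append((first, second, round(score, 2)))
    return found


# Made-up page bodies keyed by URL.
pages_text = {
    "/a": "Red running shoes for men on sale today",
    "/b": "red running shoes for men on sale today",
    "/c": "Contact our support team by phone or email",
}

print(similar_pages(pages_text))
```

Comparing every pair of pages gets slow on large sites, so treat this as a way to understand the idea and use a dedicated tool for a full audit.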

12. Check for broken links

Any type of broken link is bad for your SEO; it can waste crawl budget, create a bad user experience, and lead to lower rankings. This makes identifying and fixing broken links on your website important.

One way to find broken links is to check your crawl report. This will give you a detailed view of each URL that has broken links.

You can also use DrLinkCheck.com to look for broken links. You simply enter your site’s URL and wait for the report to be generated.
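To show roughly what a link checker does under the hood, here is a small sketch that collects the links on a page and flags the ones that return an error status. The `get_status` argument is a caller-supplied function (hypothetical here, stubbed with a dictionary lookup); in practice it would make an HTTP request for each link.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect absolute URLs from every <a href="..."> on a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, value))


def broken_links(html: str, base_url: str, get_status) -> list[str]:
    """Return links whose status code, as reported by get_status, is 400 or higher."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return [link for link in collector.links if get_status(link) >= 400]


# Made-up page and status codes standing in for real HTTP requests.
PAGE = '<p><a href="/old-page">Old</a> <a href="post-2">Next</a></p>'
STATUSES = {
    "https://www.example.com/old-page": 404,
    "https://www.example.com/blog/post-2": 200,
}

print(broken_links(PAGE, "https://www.example.com/blog/", STATUSES.get))
```

Passing the status lookup in as a function keeps the example runnable offline and lets you swap in whatever HTTP client you prefer.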

Summary

There are a number of technical SEO elements you can check during your next SEO audit. From XML Sitemaps to duplicate content, being proactive about optimization on-page and off is a must.

from Search Engine Watch https://ift.tt/2KVRAWv
