Are you struggling to get website traffic from Google?

If so, you’re not alone. One report found that 91% of content gets zero organic traffic. It’s easy to feel frustrated if you fall within that range, especially if you’ve executed an SEO strategy you hoped would work.

However, you don’t need to overhaul your entire approach to SEO to start seeing results. Instead, you can run experiments to determine the optimization tweaks you need to make, without:

- Changing your entire website

- Sacrificing the (albeit small) number of rankings you’ve got

The answer? A/B SEO testing.

In this guide, we’ll explain why A/B testing is important, the SEO elements you can split test, and a handful of companies who’ve run controlled experiments that resulted in increased search traffic.

Let’s dive in.

What Can I A/B Test?

There are various on-page SEO elements that could form the foundation of your A/B experiments, such as:

- Meta titles and descriptions

- URL structures

- Headlines

- Calls to action (button shapes, colors and text)

- Sales copy

- Product descriptions

- Images or videos

However, The Sleep Judge’s SEO Manager, Roman Kim, thinks that:

“Rewriting large parts of existing content would not be a very good test, as it would invoke Google's content freshness algorithms, so it would not be a fair test against control pages that did not receive updates.”

Let’s put that into practice. Say you’re split testing your product descriptions. You could run a controlled experiment that shows different versions of a webpage to different groups of visitors. Group A sees your standard page with a 150-word product description; Group B is redirected to a duplicate of the page with a 400-word product description.

This type of SEO A/B test lets you find out which description length performs better before you commit to writing an extra 250 words for every product without knowing whether it’s worth it.

The same concept applies to split testing your meta titles and descriptions. You could simply group several URLs into two categories — one with the word “buy” in the meta title and one without — to see whether that phrase impacts key SEO metrics (such as CTR or ranking position).

In fact, Etsy did an SEO test similar to this. They changed the title tag for specific pages on their website, then monitored the effect on website visits:

The experiment had mixed results, with some test groups seeing a positive impact on visits, and others seeing minimal (or even negative) impact. But the point is: they wouldn’t have known unless they tested.

Dive Deeper:

- How to Run A/B Tests that Actually Increase Conversions

- 5 Important Landing Page Elements You Should Be A/B Testing

- 9 Effective SEO Techniques to Drive Organic Traffic in 2019

- 7 SEO Hacks to Boost Your Ranking in 2019

- How to Create CTAs that Actually Cause Action

Why Bother with SEO Testing?

That’s a smart question to ask because nobody wants to put more work on their plate, right?

Well, split testing your SEO strategy saves time and money. Yes, sitewide changes are an investment, but an SEO strategy that isn’t working needs fixing. They’re also a huge risk: changing something across your entire site without understanding the potential impact could cost you the SEO results you’ve already got. Even if you only have 50 keyword ranking positions, you don’t want to lose them.

A/B tests also protect your website from being negatively impacted by something outside of your control. That’s important in the SEO world, where Google can change its “best practices” almost overnight. This happened recently with the E-A-T (Expertise, Authoritativeness, Trustworthiness) update, which caused several well-known sites in the healthcare industry to lose half of their organic traffic.

If you’re aware of those algorithm updates and split test the features being prioritized in the new release, you can clearly understand how your website would be impacted — rather than sit around and wait to see…by which time it might be too late.

Start by Picking Your Hypothesis

Every A/B test should start with a hypothesis: a single statement that explains the result you expect to achieve. It’s usually an if/then statement, such as:

- “If my meta descriptions include a power word, then my organic CTR will increase”

- “If I include more videos on my page, then my bounce rate will decrease”

- “If I shorten my URL structure, then Google will be able to understand my page’s content more easily, and therefore rank my shorter URLs higher in the SERPs”

A hypothesis is important for your A/B test because it gives you a clear picture of the result you expect to see. By focusing on the expected outcome, you’re testing whether your initial expectation (your hypothesis) is accurate.

How to Run an A/B SEO Test

Now that you’ve got your hypothesis, you’re ready to put it to the test. But how exactly do you run an A/B SEO test that doesn’t fail miserably or leave you with inaccurate results?

Here are three techniques you can use:

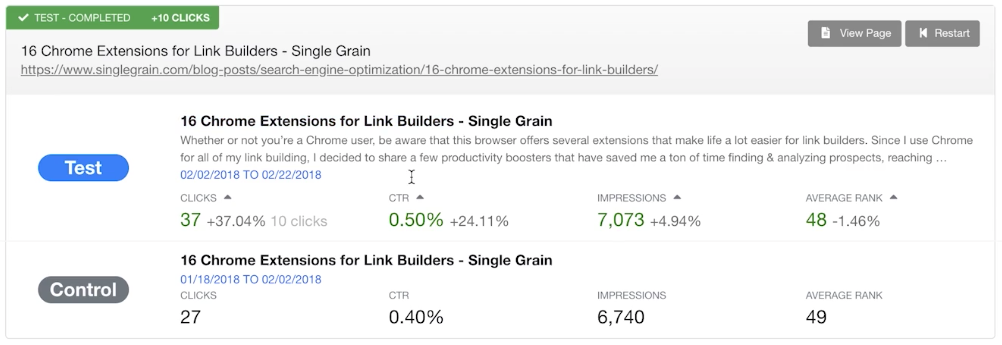

1) Clickflow

Clickflow is a tool designed to make SEO experimentation easier:

It syncs with your Google Search Console account to find opportunities where there’s a high impression count but a low click-through rate. Essentially, this means that your content is being displayed in the SERPs, but nobody’s clicking your result. They’re heading to the other listings, instead.
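To make the "high impressions, low CTR" idea concrete, here is a minimal Python sketch. It is not Clickflow's actual logic: the `PageStats` type, the `find_ctr_opportunities` helper, the sample numbers and both thresholds are assumptions for illustration, standing in for data you might export from Search Console.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """One row of (hypothetical) exported search performance data."""
    url: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Guard against pages that were never shown in search results.
        return self.clicks / self.impressions if self.impressions else 0.0


def find_ctr_opportunities(pages, min_impressions=1000, max_ctr=0.02):
    """Return pages that are shown often but rarely clicked, most-seen first.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    candidates = [
        p for p in pages
        if p.impressions >= min_impressions and p.ctr <= max_ctr
    ]
    return sorted(candidates, key=lambda p: p.impressions, reverse=True)


pages = [
    PageStats("/blog/seo-testing", impressions=12000, clicks=96),
    PageStats("/blog/ab-testing", impressions=5000, clicks=400),
    PageStats("/pricing", impressions=300, clicks=3),
]

for page in find_ctr_opportunities(pages):
    print(f"{page.url}: {page.impressions} impressions, CTR {page.ctr:.1%}")
```

Pages that pass this kind of filter are natural candidates for a title or description test, because there is already an audience seeing them in the SERPs.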

To start a new SEO test using Clickflow, simply head to the New Test button and select whether you’re testing a single URL or a group of URLs. You’ll then need to name your test and add the pages you want to test:

You can then run the test on those specific pages to assess your hypothesis.

For example, when you add more images onto the page, does your click-through rate increase? And when you include more internal links on a blog post, does the page rank higher in Google?

You can find the answers to your test in the reports section:

The best part about Clickflow? It suggests SEO experiments that you might’ve missed by looking at the average CTR for your top-performing pages and highlighting the potential clicks and revenue you could gain by improving important SEO metrics on one page:

2) Google Optimize

Chances are, you already have a Google Analytics account that you’re using to track the results of your SEO strategy. You could build on this by using Google Optimize, a tool that works directly with Google Analytics to run A/B tests on a specific website:

Austin Shong of Blip Billboards explains:

“Google Optimize is a great free tool for running A/B tests. I'm always tweaking something on pages and seeing if there is a difference. When it's small things like the copy on a button or a headline, you can make the change in Google Optimize's tool without having to edit the actual page.”

Spotify used this tool to A/B test the content of their landing pages. They used Google Analytics data to discover that customers in Germany were more interested in audiobooks than traditional playlists.

They changed a landing page using Optimize to show the collection of audiobooks they had on offer, and targeted Google Ad click traffic to drive customers from Germany towards the URL. This resulted in a 24% uplift in revenue:

Dive Deeper:

- 6 Ways Google AdWords Can Boost Your SEO Results

- How to Lower the Cost of Google Ads and Get Better Conversions

- What Gets Measured Gets Managed: How to Use Metrics to Boost Performance

- 9 Mission-Critical Lead Generation Metrics You Need To Track

- Creating Google Analytics Funnels and Goals: A Step-by-Step Guide

3) Google Ads

SEO can take a long time to pay off, so you might be waiting a while for results to start coming in before you find the winner. But who said that SEO split testing had to rely completely on organic strategies?

Danilo Godoy of Search Evaluator uses Google Ads data to run his split tests before mapping the changes over to organic efforts:

“While the results of an A/B test for paid marketing can generally be assessed within a few days, SEO is a long game, [in] which [the] rules are more complex and constantly evolving — Google deploys minor changes in the algorithm daily.

For that reason and [because] time and budget are precious things, my recommendation is that businesses save A/B tests for PPC (e.g. Google Ads) and apply what they've learned from paid advertising in their SEO strategy.

So, for example, if you want to check which page title is more attractive (i.e. receives more user clicks), this can be easily and quickly A/B tested on Google Ads and then deployed in your SEO strategy.

Trying to apply these simple [tests directly] through SEO can be a waste of time since the multitude of variables involved in ranking could impact the results of your findings.”

The simplest way to do this is through Google Ad variations to change the text for your campaigns or swap the headlines — allowing you to A/B test which type of copy works best:

You might find that a headline containing the word “2019” has a much higher CTR and conversion rate than one without. It makes sense to edit your existing (organic) page titles to include that text, right?

When Should You Stop Your A/B Test?

Your A/B test has been running for a while, yet you’re unsure when you should hit the “stop” button and analyze the results. Rightly so. Drawing an end to your experiments too late or too early runs the risk of:

- Wasting time (if the result is proven and reliable, there’s no need to continue testing)

- Not retrieving accurate data, particularly if the sample size isn’t big enough.

You can use the following techniques to determine when to stop your A/B test:

P-Value

You’ve started your test and there’s been a small volume of people viewing the variations of your web page. To quickly understand whether the experiment will be a success, you can calculate the p-value of your A/B test.

The p-value tells you how likely it is that you’d see a difference at least as large as the one you measured if your change actually had no effect (the “null hypothesis”). It ranges between 0 and 1, with a lower value indicating stronger evidence against the null hypothesis.

Typically, a p-value of less than 0.05 is treated as strong evidence against the null hypothesis, meaning your change probably did make a difference. A p-value above 0.05 means the evidence is too weak to rule out chance. It doesn’t prove that your change had no effect.

For example, if you’re testing to see whether the word “buy” increases the CTR in your meta titles, your null hypothesis is that it makes no difference to CTR. Your alternative hypothesis is that it increases CTR.

So you randomly sample a handful of meta titles and find that those including the word “buy” have a higher CTR. If your p-value turns out to be 0.005, a gap that large would be very unlikely if “buy” made no difference, so you have strong evidence that the edited versions really do earn a higher CTR.

However, if your p-value is 0.7, a gap like the one you measured could easily be down to chance. You can’t conclude that the word “buy” increases a meta title’s CTR, and you’ll need more data before making a call.
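If you want to see where a p-value like that might come from, here is a minimal sketch using a pooled two-proportion z-test, one common way to compare two click-through rates. The null hypothesis is that the variant's CTR is no higher than the control's. The function name and the click and impression counts are made up for illustration, and a testing tool may well use a different method.

```python
import math


def two_proportion_p_value(clicks_a, impressions_a, clicks_b, impressions_b):
    """One-sided p-value for the alternative "group B has a higher CTR than group A".

    Uses a pooled two-proportion z-test, which assumes reasonably large samples.
    """
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    std_err = math.sqrt(
        pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b)
    )
    if std_err == 0:
        raise ValueError("CTR is 0% or 100% in both groups; the test is undefined")
    z = (ctr_b - ctr_a) / std_err
    # Upper-tail probability of the standard normal distribution.
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical data: titles without "buy" (A) versus titles with "buy" (B).
p_value = two_proportion_p_value(300, 10_000, 360, 10_000)
print(f"p-value: {p_value:.4f}")
print("Evidence against 'no effect'" if p_value < 0.05 else "Could easily be chance")
```

The 0.05 cut-off is a convention rather than a law, so treat it as a starting point and not a guarantee.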

Statistical Significance

It’s worth noting that an early p-value isn’t the end result. You’ll need enough data to be confident the difference is real before calling a winner. But it’s tricky to know when to call it a day.

The majority of A/B tests stop after a certain period of time, whether that’s a week, a month or a quarter. When that timeframe has been reached, the testing stops and the team behind the experiment analyzes which variation won.

However, you shouldn’t run your SEO split test for a set period of time. Many factors affect how long a test needs to run, including your website’s conversion rate, how much traffic it gets and Google’s algorithm releases (if any), which makes it almost impossible to fix a timeframe in advance.

When Pinterest ran their SEO experiment, they learned that:

“In most cases we found that the impact of an experiment on traffic starts to show as early as a couple of days after launch. The difference between groups continues to grow for a week or two until it becomes steady.

Knowing this not only helps us ship successful experiments sooner, but also helps us turn off failing experiments as early as possible. We learned this the hard way.”

You could do the same thing, with the only difference being that you stop SEO A/B testing when the results are statistically significant.

An SEO experiment with statistical significance is one where the difference between your variations is unlikely to be down to random chance. It’s a way of showing that, mathematically, the result you’ve recorded is reliable, and it largely depends on your sample size. So if your result is significant at a 98% confidence level, you can be reasonably sure that it isn’t being skewed by an anomaly.

Google recommends this approach, too:

“The amount of time required for a reliable test will vary depending on factors like your conversion rates and how much traffic your website gets; a good testing tool should tell you when you’ve gathered enough data to draw a reliable conclusion.”

So, at what statistical significance level should you call it a day? The answer depends massively on your sample size.

Let’s say, for example, that you ran an SEO test that measures the change in CTRs of your landing page by changing the color of your call to action buttons. Here are the results of your experiment:

- Group 1 (red button): 800 visitors landed on the page and 100 clicked through. This is a CTR of 12.5%.

- Group 2 (green button): 15 visitors landed on the page and 3 clicked through. This is a CTR of 20%.

If you were solely using CTR as a measure of success, you’d say that Group 2 had a much higher CTR and, therefore, the green button wins.

The problem with that, however, is that far fewer people were exposed to the green button than the red button. Because the sample size is so small, the result is not statistically significant: a single extra click either way would swing the green button’s CTR by almost seven percentage points. So the experiment isn’t over. You need more people to be exposed to the green button before calling it a winner.

Take a look at the metric variation between each result. Before automatically labelling the variation with the higher metric as the winner, check whether the difference is statistically significant given the sample size of each group.
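One way to see the sample size problem in the button example is to put a range around each CTR instead of looking at a single number. The sketch below uses the Wilson score interval, a standard formula for proportions. Choosing a 95% level (z = 1.96) is an assumption, and the function name is only illustrative.

```python
import math


def wilson_interval(clicks, visitors, z=1.96):
    """Approximate 95% Wilson score interval for a click-through rate."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    p = clicks / visitors
    z2 = z * z
    denom = 1 + z2 / visitors
    center = (p + z2 / (2 * visitors)) / denom
    half_width = (
        z * math.sqrt(p * (1 - p) / visitors + z2 / (4 * visitors ** 2)) / denom
    )
    return center - half_width, center + half_width


red = wilson_interval(100, 800)   # red button: 100 clicks from 800 visitors
green = wilson_interval(3, 15)    # green button: 3 clicks from 15 visitors

print(f"Red button CTR is plausibly between {red[0]:.1%} and {red[1]:.1%}")
print(f"Green button CTR is plausibly between {green[0]:.1%} and {green[1]:.1%}")
```

With these numbers, the red button's range comes out at roughly 10% to 15%, while the green button's stretches from roughly 7% to 45%. The green range swallows the red one whole, which is another way of saying that 15 visitors can't tell you which button is better.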

Sam Orchard of Edge of the Web explains:

“Don’t just test between two, or even a few pages, as the results won’t be meaningful. Instead, test between two different groups of random pages on your website – the control group and then the group of pages you’re going to change.”

Only once each result is statistically significant can you stop the test and analyze which variation performed best.
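Splitting pages into a control group and a test group at random can be as simple as hashing each URL. The sketch below is one possible approach, not a method taken from any of the companies quoted here. The experiment name, the URLs and the 50/50 split are all assumptions.

```python
import hashlib


def assign_group(url: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically place a URL in the "variant" or "control" group.

    Hashing the experiment name together with the URL means the same page
    always lands in the same group, and a new experiment reshuffles pages.
    """
    digest = hashlib.sha256(f"{experiment}:{url}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash onto the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "variant" if bucket < variant_share else "control"


urls = [f"https://example.com/products/{i}" for i in range(1000)]
groups = {url: assign_group(url, "buy-in-title") for url in urls}

variant_count = sum(1 for group in groups.values() if group == "variant")
print(f"{variant_count} pages get the new title; {len(urls) - variant_count} stay as they are")
```

Because the assignment depends only on the URL and the experiment name, you can recompute it at reporting time instead of storing a separate list of which pages were changed.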

CausalImpact Reports

You could also assess whether your A/B test has been a success by using CausalImpact reports, as Mark Edmondson explains:

“CausalImpact is a package that looks to give some statistics behind changes you may have done in a marketing campaign. It examines the time-series of data before and after an event, and gives you some idea on whether any changes were just down to random variation, or the event actually made a difference.”

This technique is more technical and you might need to enlist the help of a developer or technical SEO, but CausalImpact reports can dig deeper into your results and give you stronger statistical evidence for or against your hypothesis.
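CausalImpact itself is an R package that fits a time-series model, so the snippet below is not CausalImpact. It is a much simpler difference-in-differences sketch that only illustrates the underlying before-and-after idea: compare how the changed pages moved against how the untouched pages moved over the same period. The function name and the daily click figures are invented for illustration.

```python
from statistics import mean


def diff_in_diff(control_pre, control_post, variant_pre, variant_post):
    """Estimate the lift from a change, net of whatever happened to the control group.

    Each argument is a list of daily clicks for that group and period.
    """
    control_change = mean(control_post) - mean(control_pre)
    variant_change = mean(variant_post) - mean(variant_pre)
    return variant_change - control_change


# Hypothetical daily clicks before and after the change went live.
control_pre = [100, 102, 98, 100]
control_post = [110, 112, 108, 110]   # control rose by 10 on its own (e.g. seasonality)
variant_pre = [200, 198, 202, 200]
variant_post = [230, 228, 232, 230]   # variant rose by 30

estimate = diff_in_diff(control_pre, control_post, variant_pre, variant_post)
print(f"Estimated extra daily clicks from the change: {estimate}")
```

Unlike a proper time-series model, this gives you a single number with no sense of how much of it could be random variation, which is exactly the gap a tool like CausalImpact aims to fill.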

How to Scale Your A/B SEO Tests

We’ve already touched on the fact that experiments should be stopped when the results are statistically significant. But when you’re running A/B SEO tests on a large scale — potentially a site with thousands of URLs — it can be tough to keep track of your results.

So you should focus on scalability, as one research paper states:

“The two core tenets of the platform are trustworthiness (an experiment is meaningful only if its results can be trusted) and scalability (we aspire to expose every single change in any product through an A/B experiment).”

The easiest way to do this is through Clickflow’s group URL testing feature. Instead of manually entering a bunch of URLs you want to split test, you enter a string that appears in all of the URLs you want to test.

For us, that’s any URL with the word “SEO”:
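That kind of pattern match is easy to picture in code. The snippet below is only an illustration of the idea, not how Clickflow implements it, and the helper name and URLs are made up.

```python
def urls_matching(urls, fragment):
    """Return every URL that contains the given text, ignoring case."""
    needle = fragment.lower()
    return [url for url in urls if needle in url.lower()]


all_urls = [
    "https://example.com/blog/seo-testing-guide",
    "https://example.com/blog/content-marketing-tips",
    "https://example.com/blog/local-SEO-checklist",
    "https://example.com/pricing",
]

test_group = urls_matching(all_urls, "seo")
print(test_group)
```

A plain substring match is blunt (it would also catch a URL like "/museos/"), so it is worth eyeballing the matched list before starting a test.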

Dive Deeper:

- How to Use Predictive Analytics for Better Marketing Performance

- How to Optimize Your Site for Search Ranking with Your Web Analytics Data

- 20 AI Tools to Scale Your Marketing and Improve Productivity

- [Growth Study] Slack: The Fastest Business App Growth in History

3 Best Practices for A/B Testing

Before you dive in with your first split test, you’ll need to lay out some ground rules. After all, you don’t want your experiments to be a waste of time.

Here are three things you’ll need to remember throughout your tests:

1) Isolate the Elements You’re Testing

It’s easy to get carried away with your split testing and try to measure the impact of several different on-page elements. You want to see what works as quickly as possible, right? Still, try to resist the rush and isolate your tests.

Running several A/B tests at the same time could skew your results, as Dave Hermansen notes in his explanation of the approach they use at Store Coach:

“We only test one thing at a time so that there is no noise and we can tell if it was that one thing that made a positive or negative difference.

We let the test run for a decent amount of time — usually at least 1,000 impressions (the more the better) — so that we can feel more assured that any changes in rankings, click through rate or conversions were due to the thing being tested and not just a fluke.”

Sure, you can run several A/B SEO tests, but make sure you pick just one element you want to test and run that experiment in isolation.
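How many impressions count as "a decent amount" depends on your current CTR and the size of the lift you hope to detect. The sketch below uses a standard sample size approximation for comparing two proportions. The 5% significance level, 80% power, function name and example CTRs are all assumptions, so treat the output as a rough planning figure.

```python
import math
from statistics import NormalDist


def impressions_per_group(baseline_ctr, expected_ctr, alpha=0.05, power=0.8):
    """Rough impressions needed in each group to detect the given CTR change.

    Two-sided test at significance level `alpha` with the given statistical power.
    """
    if baseline_ctr == expected_ctr:
        raise ValueError("baseline_ctr and expected_ctr must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_ctr + expected_ctr) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(
            baseline_ctr * (1 - baseline_ctr) + expected_ctr * (1 - expected_ctr)
        )
    ) ** 2
    return math.ceil(numerator / (expected_ctr - baseline_ctr) ** 2)


# Hypothetical goal: lift organic CTR from 3% to 4%.
needed = impressions_per_group(0.03, 0.04)
print(f"Roughly {needed} impressions per group")
```

For a lift from 3% to 4%, this lands in the region of five thousand impressions per group, and the smaller the lift you are chasing, the faster that number grows.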

2) Avoid Cloaking Google Bots

The entire foundation of A/B testing is displaying different versions of the same webpage to groups of people and judging how they interact with each. The page you’re showing isn’t exactly the same — which makes it easy for you to land in hot water.

Google strictly forbids cloaking — which is the act of showing different webpages (and content) to search engine spiders and your human visitors:

So how can you avoid landing in Google’s bad books when the entire point of A/B SEO testing is to create multiple versions of the same page? The answer is simple: Don’t index the duplicate pages.

When you’ve got several copies of the same page, Google bots won’t know which is the real one. They might think your original page is for humans and your test page is built solely for bots, meaning you’re cloaking them. However, de-indexing the new test page (or adding a rel="canonical" tag to the test page that points to the original) will ensure that Google knows which page is the right one.
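If you go the canonical route, it is worth checking that each test page really does point back at the original. Here is a small sketch using Python's built-in HTML parser. The class name, helper name and example URLs are assumptions, and in practice you would feed it the HTML you fetched from your own test pages.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def canonical_url(html: str):
    """Return the canonical URL declared in the HTML, or None if there isn't one."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical test page that should point back at the original product page.
original = "https://example.com/product"
variant_html = """
<html><head>
  <title>Product (test version)</title>
  <link rel="canonical" href="https://example.com/product">
</head><body>Longer description goes here.</body></html>
"""

if canonical_url(variant_html) == original:
    print("Test page points back at the original")
else:
    print("Canonical tag is missing or points somewhere else")
```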

3) Build an A/B Testing Mindset

The truth is that running split tests can be scary, especially if you’re running them on a huge website with lots of potential for things to go wrong.

In LinkedIn’s write up of the common A/B testing challenges in social networks, they stated that:

“Running large scale A/B tests is not just a matter of infrastructure and best practices; establishing a strong experimentation culture is also key to embedding A/B testing as part of the decision making process.”

To make SEO split-testing work for you, you need to practice. Provide your team with lots of opportunities to run experiments — so long as they’re done correctly and safely. As LinkedIn explained, you need to build an experimentation culture.

Thumbtack, which runs 30 A/B tests per month, does this effectively. They build a split-testing mindset into their team by encouraging half of their in-house engineers to own at least one A/B test within their first six months through:

- Hosting hour-long, monthly workshops on A/B testing for their new team members

- Intensive documentation, shared on a company drive, which details how they run tests

- Providing sample size calculation tools for staff to understand the expected outcome of their experiments

- Asking team members with experience in split testing to coach their new engineers as they complete their own experiments

Not only does this help boost the confidence and willingness of their team, but the constant reinforcement of “we need to test regularly” could lead to incredible results.

Final Thoughts

As you can see, split testing various SEO strategies is complex — but worth its weight in gold when you hit the jackpot and move up from the low keyword ranking positions you’ve been stuck in.

Remember to start with your hypothesis, pick an approach that works depending on the elements you’re testing, and avoid cloaking Google bots. You don’t want to land a nasty penalty in the meantime!

The post What Is A/B SEO Testing? appeared first on Single Grain.

from Single Grain https://ift.tt/2FQXxnx
