Search is a never-ending story. A search engine is never done fine-tuning how it gets results to you in a way that makes sense for both the search engine and you. At first, Google was all about digging through loads of information to find what you need, but now AI increasingly helps it make sense of our world in all its complex glory. Here’s what’s coming soon, as presented at yesterday’s Google Search On event.

What is search in 2020?

Google has come a long way since the original page-ranking algorithm that Sergey Brin and Larry Page introduced in 1998. At that time, though, the internet was just getting started and it was a much simpler place. What’s more, the world itself was a much simpler place. Today, many of the things we take for granted are being questioned as some people continue to sow distrust and spread rumours, fake news and ‘alternative facts’.

In 2020, information is everywhere, and it’s available on more platforms and devices than ever. A search engine in 2020 is no longer just a tool to find an answer to your question, but also a window to the world. Search is text, search is visual, search is voice, and search is moving into the third dimension with AR. A search engine is also a provider of important information regarding world events like COVID-19 and the US elections.

A search engine in 2020 is looking to understand the world at a level not seen before. New layers are being added to the knowledge graph to help it understand information, give valuable answers and present increasingly complex material to the searcher. Data set search with graphs made especially for your search? You got it. Understanding atoms by projecting a 3D model on your kitchen table? Of course, why not? Humming that song you have stuck in your head to find out what it is? Maybe a niche thing, but you can.

Increasingly, search is a combination of the offline and online world. This was one of the things that stood out at yesterday’s Google Search On event.

Understanding language

All of this starts with a thorough understanding of language. A while back, Google launched an incredible new NLP technology called BERT. BERT is unique, because it considers the full context of a word by looking at the words that come before and after it. This helps Google understand the intent behind searches. BERT was launched to great success and will now be used for almost every search in the English language, with many languages following.
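To get a feel for why looking at both sides of a word matters, here is a toy sketch in Python. It is not BERT and not how Google does it: BERT learns its understanding of context with a neural network, while this example uses a hand-written, hypothetical list of cue words (`SENSE_CUES`) and made-up helper names (`context_window`, `guess_sense`). It only shows that a reader who sees the words after "bank" can pick up a meaning that a reader limited to the words before it would miss.

```python
def context_window(tokens, index, size=2, bidirectional=True):
    """Return the words around tokens[index].

    With bidirectional=False only the words to the left are returned,
    which is what a strictly left-to-right reader would have seen.
    """
    left = tokens[max(0, index - size):index]
    right = tokens[index + 1:index + 1 + size] if bidirectional else []
    return left + right


# Hypothetical cue words for two senses of "bank"; purely illustrative.
SENSE_CUES = {
    "riverside": {"river", "water", "fishing"},
    "financial": {"money", "loan", "account"},
}


def guess_sense(tokens, index, bidirectional=True):
    """Pick the sense whose cue words overlap most with the context, or None."""
    context = set(context_window(tokens, index, size=3, bidirectional=bidirectional))
    scores = {sense: len(cues & context) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


query = "parking near the bank of the river".split()
position = query.index("bank")

# Only the words before "bank" are visible: no cue word, so no guess.
left_only = guess_sense(query, position, bidirectional=False)

# Words before and after "bank" are visible: "river" tips the balance.
both_sides = guess_sense(query, position, bidirectional=True)

print(left_only, both_sides)
```

In this example the left-only reader has nothing to go on, while the reader that also sees "of the river" settles on the riverside meaning.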

Google also introduced the biggest improvement to its spelling algorithm in the last five years. They’ve built a deep neural net that gives them more power to decipher complex misspellings and present the corrections in record time.

Ranking passages

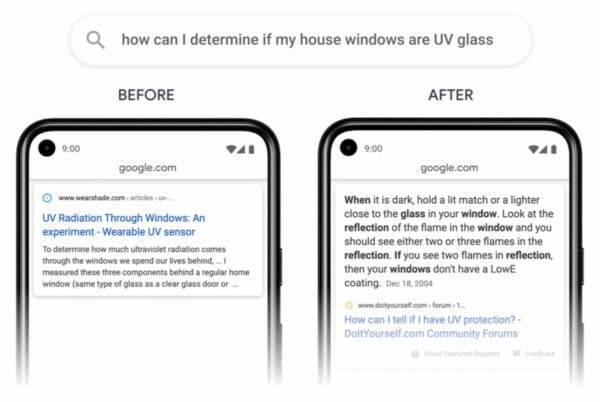

Search isn’t all about finding a page that completely answers your search query. Sometimes, what you want is an answer to a question so specific that it’s merely hinted at on a page. To help find those needles in a haystack, Google will now start ranking passages from pages and make these available in the search results. By analyzing these separately, Google can answer an even broader set of questions. This is targeted at a fairly small number of searches, about 7%.
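The idea of scoring passages separately from the page they live on can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Google's method: the function names are made up for this example, passages are assumed to be separated by blank lines, and the score is a simple count of shared words, where Google relies on AI-based language understanding.

```python
import re


def split_into_passages(page_text):
    """Split a page into passages, using blank lines as boundaries."""
    return [p.strip() for p in re.split(r"\n\s*\n", page_text) if p.strip()]


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def score_passage(query, passage):
    """Fraction of query terms that appear in the passage (0.0 to 1.0)."""
    query_terms = tokenize(query)
    if not query_terms:
        return 0.0
    return len(query_terms & tokenize(passage)) / len(query_terms)


def rank_passages(query, page_text):
    """Return (score, passage) pairs, best match first."""
    scored = [(score_passage(query, p), p) for p in split_into_passages(page_text)]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# A made-up page: only the middle passage touches on the question.
page = """Our shop sells windows for every kind of home.

To check whether window glass blocks UV light, look for a UV rating label on the glass.

Contact us for a free quote."""

ranked = rank_passages("does window glass block UV light", page)
best_score, best_passage = ranked[0]
print(best_passage)
```

The page as a whole is about a window shop, yet the middle passage comes out on top for this question. That is the needle-in-a-haystack effect described above, in miniature.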

Understanding topics and subtopics

AI also helps Google differentiate between topics and subtopics. This means that Google can now make search results more helpful by dividing them into different subtopics, based on what it knows about that broad search term. In Google’s example, the broad search term home exercise equipment will now be followed by more specific subtopics like budget home exercise equipment or small space home fitness equipment, making it easier to pick the results to your liking.

Understanding video

Video is a huge deal on the web and it is only going to become more important as we go along. For years, Google has been using transcriptions to get an idea of what a video is about. This year, they are betting on AI to help them gain more and better insights into video: not just what’s said when, but also what it means. Google can now identify the key moments in a video and lets you jump there with a single click.

More ways to search

Search is no longer only about entering a keyphrase in a search box. Today, search is everywhere and the possibilities are growing. At yesterday’s event, Google shared new ways to search and interact with their Lens app, for instance. Not only can you take a picture of a piece of text in a foreign language to get it translated, but the app can now also pronounce it for you. Google is also filling the knowledge graph with all sorts of learning elements, so it can help you solve math problems too. No cheating on that test, though!

Google Lens can also help you shop. Simply upload the photo of a product you like and the app will give you suggestions. Here, AR will also come into play. Increasingly, augmented reality will help you look at the product you like in a 3D space. Be it a cool sweater or that new car you’ve been looking at.

Data set search thanks to Data Commons

Google is opening up a huge data set for the world to use. The Data Commons Project is an open knowledge database filled with statistical data from different reputable sources. All this data is now available in Google Search. A new data layer in the knowledge graph helps you find statistical data that’s otherwise hard or impossible to find. It even makes graphs for you on the fly.

Google uses its natural language processing skills to understand the question you are asking and to find the data answering that question. Of course, you can also run comparisons to other data points.

What this means for the future

From the looks of it, it almost seems as if Google has been fitted with a new brain. By increasing the use of AI in their processes, they have achieved an unprecedented understanding of not just language, but the world around us. Google is using those insights to provide us with simple answers to ever-more complex questions, from math problems to working with big data sets.

As for SEO, since Google is becoming much better at understanding language and topics, it will also be better at spotting high-quality content. Focusing on quality and investing in a fast and flawless website will continue to be your main focal point. It is also interesting to see how the indexing of passages will turn out, as this might seem like some kind of super-powered featured snippet. In general, the search results might become a bit more diverse in the coming months.

I asked our resident SEO expert Jono Alderson what he thought of the event:

“It’s important to remember that Google’s core objective has never been to return a list of search results when you type something in. Their goal has been to solve the problems of their users. What we’re seeing here is a huge step forward in that mission. Google is increasingly acting as our personal assistant, and ‘conventional search’ is just one small part of that. That means that as SEO folks, webmasters, and business owners, we need to be thinking not just about ‘how do I rank higher?’, but, ‘how do I solve the problems of Google’s users?’. That’s about content strategy, differentiation, and building brands.”

Jono Alderson

That’s it for the Google Search On event

The evolution of Google is mighty interesting. Today, Google is like an incredibly powerful brain that is at your disposal. You can ask it very complex questions and you get results you can use to do your work, shopping, learning or research. You can use your voice or vision to search and you can ‘touch’ objects generated in AR for you.

Who would have thought that in 1998?

Search in 2020 is extremely powerful.

The post Google Search On 2020 event: AI improvements for search appeared first on Yoast.

from https://ift.tt/37e68yM
